Hyperparameter tuning

Hyperparameter tuning, often referred to as hyperparameter optimization, is the process of finding the best set of hyperparameters for a machine learning model to optimize its performance on a given task. Hyperparameters are configuration settings that are not learned from the data but are set prior to training a model. Properly tuned hyperparameters can significantly improve a model's accuracy, generalization, and efficiency. Here's an overview of hyperparameter tuning in machine learning:

Hyperparameters vs. Parameters:

- Parameters are values that the model learns from the training data, such as weights and biases in neural networks.

- Hyperparameters are settings that control the learning process but are not learned from the data. Examples include learning rate, batch size, and the architecture of the model itself.
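
The distinction can be made concrete with a small sketch. The function below is an illustrative toy, not taken from any particular library: `learning_rate` and `n_epochs` are hyperparameters chosen before training, while `w` and `b` are parameters that gradient descent learns from the data.

```python
def fit_line(xs, ys, learning_rate=0.05, n_epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error.

    learning_rate and n_epochs are hyperparameters: fixed before training.
    w and b are parameters: updated from the data during training.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = fit_line(xs, ys, learning_rate=0.05, n_epochs=2000)
```

Changing `learning_rate` or `n_epochs` changes how (and whether) training converges, but neither value is ever updated by the training loop itself.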

Why Hyperparameter Tuning is Important:

- Hyperparameters significantly impact a model's performance and generalization to new data.

- An improperly tuned model may overfit (high variance) or underfit (high bias), leading to suboptimal results.

Hyperparameter Search Space:

- The search space defines the possible values or ranges for each hyperparameter. For example, the learning rate might be searched within the range [0.001, 0.1], typically on a logarithmic scale because useful values span orders of magnitude.

- The search space can be discrete, continuous, or a combination of both.
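
One way to write down a mixed search space is sketched below. The dictionary layout, the distribution names (`log_uniform`, `uniform`, `choice`), and the `dropout` entry are assumptions made for illustration; real tuning libraries each have their own syntax.

```python
import math
import random

# Hypothetical search space: names and ranges are illustrative only.
SEARCH_SPACE = {
    "learning_rate": ("log_uniform", 0.001, 0.1),  # continuous, log scale
    "dropout": ("uniform", 0.0, 0.5),              # continuous, linear scale
    "batch_size": ("choice", [16, 32, 64, 128]),   # discrete
}


def sample_config(space, rng):
    """Draw one hyperparameter configuration from the search space."""
    config = {}
    for name, spec in space.items():
        kind = spec[0]
        if kind == "log_uniform":
            low, high = spec[1], spec[2]
            # Sample uniformly in log space, then map back.
            config[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        elif kind == "uniform":
            config[name] = rng.uniform(spec[1], spec[2])
        elif kind == "choice":
            config[name] = rng.choice(spec[1])
        else:
            raise ValueError(f"unknown distribution: {kind}")
    return config


rng = random.Random(0)  # fixed seed so the draw is reproducible
config = sample_config(SEARCH_SPACE, rng)
```

Sampling the learning rate in log space gives values near 0.001 the same chance of being tried as values near 0.1, which a linear-scale draw would not.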

Hyperparameter Optimization Techniques:

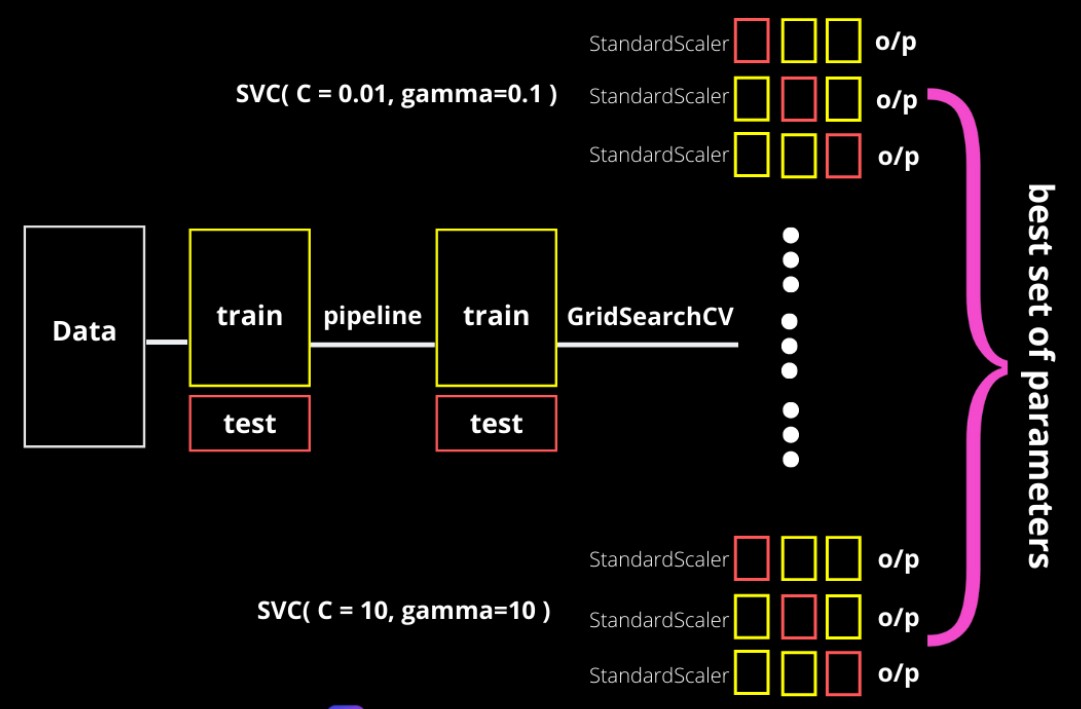

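- Grid Search: Grid search evaluates every combination of a predefined set of values for each hyperparameter. It is simple and easy to parallelize, but the number of combinations grows exponentially with the number of hyperparameters, so it quickly becomes computationally expensive.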

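- Random Search: Random search samples hyperparameters randomly from the search space. For a fixed budget it is often more efficient than grid search, especially when only a few hyperparameters strongly affect performance, because every trial tests a new value of each hyperparameter.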

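- Bayesian Optimization: Bayesian optimization fits a surrogate model (for example, a Gaussian process) to the objective function (e.g., validation performance) and uses the surrogate's predictions and uncertainty estimates to choose the next configuration, balancing exploration of untested regions against exploitation of promising ones.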

- Genetic Algorithms: Genetic algorithms use principles from natural selection to evolve a population of hyperparameter configurations over generations: the best-performing configurations are selected, recombined, and mutated to produce the next generation.

- Gradient-Based Optimization: Some libraries and frameworks offer gradient-based methods for hyperparameter tuning, where the gradient of the validation loss with respect to continuous hyperparameters is computed or estimated and used to update those hyperparameters during training.

- Automated Machine Learning (AutoML): AutoML platforms automate the process of hyperparameter tuning along with other steps like feature selection, model selection, and data preprocessing.
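
The two simplest techniques above, grid search and random search, can be sketched in a few lines. In this sketch `validation_score` is a hypothetical stand-in for "train a model with this configuration and return its validation score"; its shape and its peak are assumptions chosen so the example runs instantly.

```python
import itertools
import math
import random


def validation_score(learning_rate, batch_size):
    """Hypothetical stand-in for training and validating a model.

    Higher is better; peaks at learning_rate=0.01, batch_size=64.
    """
    lr_term = (math.log10(learning_rate) + 2.0) ** 2
    bs_term = (math.log2(batch_size) - 6.0) ** 2
    return 1.0 - 0.1 * lr_term - 0.01 * bs_term


def grid_search(grid):
    """Evaluate every combination in the grid; return (best_score, best_config)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = validation_score(**config)
        if best is None or score > best[0]:
            best = (score, config)
    return best


def random_search(n_trials, rng):
    """Evaluate n_trials random configurations; return (best_score, best_config)."""
    best = None
    for _ in range(n_trials):
        config = {
            "learning_rate": 10 ** rng.uniform(-3, -1),  # log-uniform in [0.001, 0.1]
            "batch_size": rng.choice([16, 32, 64, 128]),
        }
        score = validation_score(**config)
        if best is None or score > best[0]:
            best = (score, config)
    return best


grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [16, 32, 64, 128]}
grid_best = grid_search(grid)                      # 3 * 4 = 12 evaluations
random_best = random_search(12, random.Random(0))  # same budget of 12 evaluations
```

Grid search only ever tries three distinct learning rates here, while random search with the same budget tries twelve. That difference is the usual argument for random search when some hyperparameters matter much more than others.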

Cross-Validation:

- Cross-validation is essential for hyperparameter tuning. It helps assess a model's performance on various subsets of the training data, reducing the risk of overfitting to a specific dataset or validation set.

- Common cross-validation techniques include k-fold cross-validation and stratified k-fold cross-validation, which preserves the class proportions of the full dataset in each fold.
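
A minimal sketch of plain k-fold splitting follows. It assumes the data has already been shuffled and uses contiguous folds; the mean predictor in `cross_val_mse` is a deliberately trivial placeholder for a real model, and stratification is not shown.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, val_indices) for k contiguous folds.

    Assumes the samples were shuffled beforehand.
    """
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


def cross_val_mse(ys, k):
    """Average validation MSE across k folds for a placeholder mean predictor."""
    fold_errors = []
    for train, val in k_fold_indices(len(ys), k):
        prediction = sum(ys[i] for i in train) / len(train)  # "fit" on train only
        mse = sum((ys[i] - prediction) ** 2 for i in val) / len(val)
        fold_errors.append(mse)
    return sum(fold_errors) / k


folds = list(k_fold_indices(10, 3))
```

During tuning, each candidate configuration would be scored with the averaged fold error rather than with a single train/validation split, so that one lucky split does not decide the winner.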

Evaluation Metrics:

- Choose appropriate evaluation metrics that align with the problem you are solving. Common metrics include accuracy, F1-score, mean squared error (MSE), and others based on the task (classification, regression, etc.).
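
For reference, the three metrics named above can be computed directly from their definitions. This is a plain-Python sketch for binary labels and numeric targets; the sample labels and values are made up for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # convention: no true positives means F1 is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Classification example (made-up labels).
labels = [1, 0, 1, 1, 0, 0]
predicted = [1, 0, 0, 1, 1, 0]
acc = accuracy(labels, predicted)
f1 = f1_score(labels, predicted)

# Regression example (made-up values).
mse = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.5, 2.0])
```

Whichever metric is chosen becomes the objective that the tuning procedure maximizes or minimizes, so it should reflect what matters for the task (for example, F1 rather than accuracy when classes are heavily imbalanced).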

Early Stopping:

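- During hyperparameter tuning, use early stopping to prevent models from training for too long and overfitting. Early stopping monitors validation performance and stops training once it has failed to improve for a set number of consecutive epochs (often called the patience). This also saves compute on unpromising configurations.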
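
A patience-based stopping rule can be sketched as follows. To keep the example self-contained, the per-epoch validation losses are passed in as a list of made-up numbers; in a real training loop each value would come from evaluating the model after an epoch.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, stopped_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve on the best value
    seen so far for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Ran out of epochs before the patience limit was reached.
    return best_epoch, len(val_losses) - 1


# Made-up validation losses: improve until epoch 3, then drift upward.
losses = [0.9, 0.7, 0.6, 0.55, 0.56, 0.58, 0.57, 0.60, 0.65]
best_epoch, stopped_epoch = early_stopping_epoch(losses, patience=3)
```

In practice the model weights from `best_epoch` are usually the ones kept, and `patience` is itself a hyperparameter.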

Visualization and Analysis:

- Visualize the results of hyperparameter tuning experiments to understand trends and make informed decisions about which configurations to explore further.

Iterative Process:

- Hyperparameter tuning is often an iterative process. Start with a broad search, identify promising configurations, and refine the search space based on initial results.
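
The broad-then-refine loop can be sketched for a single hyperparameter. Here `validation_loss` is a hypothetical stand-in for a full training run, with its minimum placed at a learning rate of 0.02 purely for illustration. The number of rounds and grid points are likewise arbitrary choices.

```python
import math


def validation_loss(learning_rate):
    """Hypothetical stand-in for a training run; minimized at learning_rate = 0.02."""
    return (math.log10(learning_rate) - math.log10(0.02)) ** 2


def log_grid(low, high, n):
    """Return n values evenly spaced on a log scale between low and high."""
    step = (math.log10(high) - math.log10(low)) / (n - 1)
    return [10 ** (math.log10(low) + i * step) for i in range(n)]


def coarse_to_fine(low, high, rounds=3, points=5):
    """Repeatedly search a log grid, then shrink the range around the best value."""
    best = None
    for _ in range(rounds):
        candidates = log_grid(low, high, points)
        best = min(candidates, key=validation_loss)
        # Narrow the range to the neighbours of the best candidate.
        i = candidates.index(best)
        low = candidates[max(i - 1, 0)]
        high = candidates[min(i + 1, points - 1)]
    return best


best_lr = coarse_to_fine(0.0001, 1.0)
```

Each round spends the same number of evaluations on a narrower range, so resolution improves where the earlier results suggest it matters.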

Hyperparameter tuning is a critical step in building machine learning models that perform well in practice. It requires patience and computational resources but can lead to substantial improvements in model performance and robustness.