Natural Language Processing (NLP)

Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and human language. Deep learning has transformed the field, enabling major advances in understanding, interpreting, and generating human language.

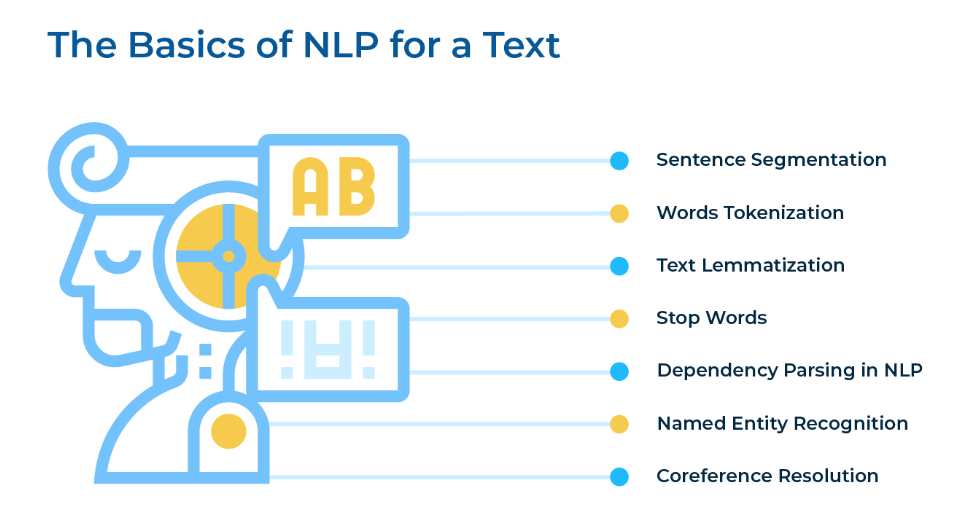

Key Components of NLP

NLP involves several core tasks, each with its own challenges and methods:

a. Text Preprocessing

- Tokenization: Splitting text into words, phrases, or other meaningful elements (tokens).

- Normalization: Converting text to a standard format (e.g., lowercasing, removing punctuation).

- Stemming/Lemmatization: Reducing words to their base or root form.

- Stopword Removal: Eliminating common words (e.g., "and", "the") that do not carry significant meaning.
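The four preprocessing steps above can be chained into a small pipeline. The following is a minimal sketch using only the Python standard library. The stopword list and the suffix-stripping `naive_stem` function are illustrative assumptions, not a real stemmer such as Porter's, and the function names are hypothetical.

```python
import re

# Tiny illustrative stopword list (an assumption; real lists are much longer).
STOPWORDS = {"a", "an", "and", "the", "is", "are", "of", "to", "in"}


def normalize(text: str) -> str:
    """Lowercase the text and replace punctuation with spaces."""
    return re.sub(r"[^\w\s]", " ", text.lower())


def tokenize(text: str) -> list[str]:
    """Split on whitespace; assumes the text is already normalized."""
    return text.split()


def naive_stem(token: str) -> str:
    """Strip a few common English suffixes (a crude stand-in for a real stemmer)."""
    for suffix in ("ing", "ed", "es", "s"):
        # Keep at least three characters so short words are left alone.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Normalize, tokenize, drop stopwords, then stem what remains."""
    tokens = tokenize(normalize(text))
    tokens = [t for t in tokens if t not in STOPWORDS]
    return [naive_stem(t) for t in tokens]


print(preprocess("The cats are chasing the mice, and the dogs barked!"))
# → ['cat', 'chas', 'mice', 'dog', 'bark']
```

Note that the crude stemmer turns "chasing" into "chas" and leaves "mice" unchanged. A lemmatizer would instead map these to dictionary forms ("chase", "mouse"), which is the practical difference between stemming and lemmatization.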

b. Language Modeling

- Definition: Assigning probabilities to sequences of words, typically by predicting the next word given the words that precede it.

- Applications: Text generation, autocomplete, machine translation.
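To make next-word prediction concrete, here is a minimal sketch of a count-based bigram model, which is far simpler than the neural models discussed later but follows the same idea. The three-sentence corpus, the `<s>` and `</s>` boundary markers, and the function names are assumptions made for illustration.

```python
from collections import Counter


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    counts: dict[str, Counter] = {}
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts.setdefault(prev, Counter())[nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Return P(next | prev) estimated from relative frequencies."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {word: n / total for word, n in followers.items()}


corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(max(probs, key=probs.get))  # → cat
```

In this toy corpus "the" is followed by "cat" twice and "dog" once, so the model assigns "cat" a probability of 2/3. An autocomplete feature would suggest the highest-probability continuation.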

Deep Learning Techniques in NLP

Several deep learning architectures have been pivotal in advancing NLP:

a. Recurrent Neural Networks (RNNs)

- Description: Designed for sequential data. Recurrent connections feed the hidden state from one time step into the next, allowing information to persist across the sequence.

- Variants: Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), which use gating to mitigate the vanishing gradient problem and capture longer-term dependencies than a plain RNN.
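The recurrence can be sketched in a few lines. This is a plain (Elman-style) RNN forward pass in pure Python, not an LSTM or GRU, and it omits training entirely. The weight values, dimensions, and function names are hypothetical and chosen only so the example is easy to follow.

```python
import math


def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(inputs, W_xh, W_hh, b_h):
    """Process a sequence left to right, carrying the hidden state forward."""
    h = [0.0] * len(b_h)  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Hypothetical hand-picked weights: 2 input features, 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]

sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = run_rnn(sequence, W_xh, W_hh, b_h)
print(states[-1])  # final hidden state summarizes the whole sequence
```

Because each hidden state depends on the previous one, feeding the last input `[1.0, 1.0]` on its own gives a different result than feeding it after the two earlier inputs. That dependence on history is what "allowing information to persist" refers to.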

b. Convolutional Neural Networks (CNNs)

- Description: Originally used for image processing, CNNs can also be applied to text for tasks like sentence classification by capturing local patterns.

- Usage: Sentiment analysis, text classification.
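"Capturing local patterns" means sliding a small filter over adjacent token embeddings. The sketch below shows one filter of width 2 followed by max-over-time pooling, in pure Python. The 2-dimensional embeddings and the filter weights are made-up values; in a trained model both would be learned.

```python
def conv1d_text(embeddings, kernel, bias=0.0):
    """Slide a filter of width len(kernel) over the token embeddings, with ReLU."""
    k = len(kernel)
    features = []
    for start in range(len(embeddings) - k + 1):
        window = embeddings[start:start + k]
        total = bias
        for w_row, e_row in zip(kernel, window):
            total += sum(w * e for w, e in zip(w_row, e_row))
        features.append(max(0.0, total))  # ReLU activation
    return features


def max_over_time(features):
    """Keep the strongest response of the filter anywhere in the sentence."""
    return max(features) if features else 0.0


# Hypothetical 2-dimensional embeddings for a four-token sentence.
embeddings = [[-1.0, 0.0], [0.0, 0.5], [1.0, 1.0], [0.0, 0.0]]
# Hypothetical filter spanning two adjacent tokens.
kernel = [[0.0, 1.0], [1.0, 1.0]]

features = conv1d_text(embeddings, kernel)
print(features)                 # → [0.5, 2.5, 1.0]
print(max_over_time(features))  # → 2.5
```

Each feature corresponds to one pair of neighboring tokens, so the filter acts like a learned bigram detector. A sentence classifier would typically apply many such filters of several widths and pass the pooled values to a final classification layer.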

c. Attention Mechanisms

- Description: Allow models to focus on specific parts of the input sequence, improving performance on tasks like translation and summarization.

- Self-Attention: Lets each position in a sequence attend to every other position in the same sequence, so the model weighs all parts of the input at once.
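The core computation can be written compactly as scaled dot-product attention: score each query against every key, turn the scores into weights with a softmax, and take the weighted average of the values. The sketch below is pure Python and, as a simplifying assumption, uses the input vectors directly as queries, keys, and values instead of applying learned projection matrices.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Self-attention: the same sequence supplies queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one position attends to every position in the sequence. The first vector `[1.0, 0.0]` attends more to itself and to `[1.0, 1.0]` than to `[0.0, 1.0]`, because its dot product with the latter is zero.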

d. Transformers

- Description: A model architecture that relies on attention rather than recurrence or convolution to handle long-range dependencies.

- Significance: Form the basis of state-of-the-art models like BERT, GPT, and T5.

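- Architecture: The original design is an encoder-decoder, where the encoder processes the input sequence and the decoder generates the output sequence. Later models use parts of this design: BERT uses only the encoder, GPT uses only the decoder, and T5 keeps both.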

Pretrained Language Models

Pretrained language models have become the cornerstone of modern NLP:

a. BERT (Bidirectional Encoder Representations from Transformers)

- Description: Pretrained on large corpora by predicting masked tokens, so each word's representation draws on context from both the left and the right.

- Applications: Question answering, sentiment analysis, named entity recognition.

b. GPT (Generative Pre-trained Transformer)

- Description: Focuses on text generation by predicting the next word in a sequence.

- Applications: Text completion, language translation, conversational agents.

c. T5 (Text-to-Text Transfer Transformer)

- Description: Treats every NLP task as a text-to-text problem, enabling a unified approach to multiple tasks.

- Applications: Translation, summarization, classification.
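The text-to-text idea is easiest to see in how inputs are prepared: every task becomes "input string in, output string out", with a short prefix telling the model which task to perform. The sketch below only builds the input strings and does not call any model. The prefixes are written in the style used for T5, but the exact strings a given checkpoint expects are an assumption here, and `to_text_to_text` is a hypothetical helper.

```python
# Illustrative task prefixes in the T5 style (exact strings are an assumption).
TASK_PREFIXES = {
    "translation": "translate English to German: ",
    "summarization": "summarize: ",
    "classification": "sst2 sentence: ",
}


def to_text_to_text(task: str, text: str) -> str:
    """Turn a (task, input) pair into a single prefixed input string."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text


examples = [
    ("translation", "The house is wonderful."),
    ("summarization", "Long article text goes here."),
    ("classification", "A charming and often affecting film."),
]
for task, text in examples:
    print(to_text_to_text(task, text))
```

Because the target is also plain text (a German sentence, a summary, or a label word), the same model, loss function, and decoding procedure can serve all three tasks.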

NLP Tasks and Applications

NLP encompasses a wide range of tasks and applications, each benefiting from deep learning advancements:

a. Text Classification

- Examples: Sentiment analysis, spam detection, topic categorization.

- Techniques: RNNs, CNNs, transformers.

b. Named Entity Recognition (NER)

- Description: Identifying entities like names, dates, and locations in text.

- Applications: Information extraction, knowledge graphs.

c. Machine Translation

- Description: Translating text from one language to another.

- Techniques: RNNs, attention mechanisms, transformers.

d. Text Generation

- Examples: Story generation, code generation, poetry composition.

- Techniques: GPT models, RNNs, transformers.

e. Question Answering

- Description: Automatically answering questions posed by humans in natural language.

- Techniques: BERT, transformers, attention mechanisms.

f. Summarization

- Description: Producing concise summaries of longer texts.

- Techniques: Transformers, sequence-to-sequence models.

Challenges in NLP

Despite significant advancements, NLP still faces several challenges:

a. Ambiguity

- Types: Lexical (word sense), syntactic (sentence structure), semantic (meaning).

- Example: "I saw the man with the telescope" (Who has the telescope?).

b. Context and Understanding

- Description: Understanding context, idioms, and nuances in human language.

- Example: "He kicked the bucket" (literal vs. idiomatic meaning).

c. Resource Requirements

- Description: Training large models requires substantial computational resources and data.

- Example: GPT-3, with 175 billion parameters, necessitates extensive computational power.
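A quick back-of-the-envelope calculation shows the scale involved. The sketch below estimates only the memory needed to store the weights of a 175-billion-parameter model at two common numeric precisions. It deliberately ignores activations, gradients, and optimizer state, which add substantially more during training, and the helper name is hypothetical.

```python
def param_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


GPT3_PARAMS = 175_000_000_000  # 175 billion, as stated above

fp32_gb = param_memory_gb(GPT3_PARAMS, 4)  # 32-bit floats: 4 bytes each
fp16_gb = param_memory_gb(GPT3_PARAMS, 2)  # 16-bit floats: 2 bytes each

print(f"fp32 weights: {fp32_gb:.0f} GB")  # → fp32 weights: 700 GB
print(f"fp16 weights: {fp16_gb:.0f} GB")  # → fp16 weights: 350 GB
```

Even at 16-bit precision the weights exceed the memory of any single commodity accelerator, which is why models of this size are split across many devices.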

d. Bias and Fairness

- Description: Models can inadvertently learn and propagate biases present in training data.

- Example: Gender, racial, and cultural biases in language models.

Future Directions in NLP

- Explainability: Making models more interpretable and understandable to humans.

- Multilingual Models: Developing models that can understand and generate multiple languages.

- Resource Efficiency: Creating more efficient models that require fewer computational resources.

- Ethics and Fairness: Addressing biases and ensuring fair and equitable NLP systems.

Deep learning for NLP continues to evolve, driven by advances in model architectures, pretraining techniques, and computational capabilities. These innovations make it possible to build systems that understand, interpret, and generate human language with steadily improving accuracy and fluency.
