Digital image processing

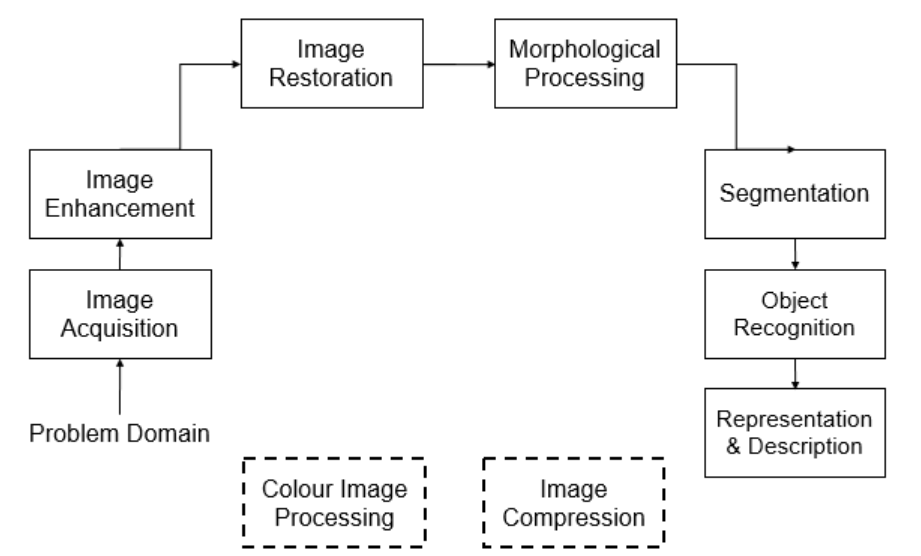

Digital image processing involves a series of steps to manipulate and enhance images using computer algorithms.

Basic steps typically involved in the process:

- Image Acquisition: This is the initial step where images are captured using cameras, scanners, satellites, or other imaging devices. The quality of the acquired image can significantly impact the subsequent processing steps.

- Preprocessing: Preprocessing involves preparing the image for further analysis. This step includes operations such as noise reduction, image enhancement, and color correction. Common preprocessing techniques include median filtering, Gaussian blurring, and histogram equalization.

- Image Enhancement: Image enhancement techniques are used to improve the visual quality of images. These techniques adjust the contrast, brightness, and sharpness of the image to make it more suitable for human perception or analysis algorithms.

- Image Restoration: Image restoration aims to recover the original image from a degraded or noisy version. Restoration techniques attempt to reverse the effects of blurring, noise, or other degradations introduced during image acquisition.

- Color Correction and Manipulation: Color correction adjusts color balance and removes color casts to ensure accurate representation. Color manipulation techniques can be used to enhance specific features or for artistic effects.

- Image Compression: Image compression reduces the amount of data required to represent an image while retaining as much visual quality as possible. Lossless methods (such as PNG) preserve every pixel exactly, while lossy methods (such as JPEG) discard some detail in exchange for smaller files. Both are used to decrease file sizes for storage and transmission.

- Segmentation: Segmentation divides an image into meaningful regions or objects. It's a crucial step for object detection, tracking, and recognition tasks. Techniques like thresholding, clustering, and edge-based methods are used for segmentation.

- Feature Extraction: Feature extraction involves identifying and extracting relevant information from the image to describe its content. These features can be texture, color, shape, or any other characteristic that helps in subsequent analysis.

- Image Transformation: Image transformation involves changing the representation of an image while preserving its content. Common transformations include geometric operations such as rotation, scaling, and translation.

- Spatial Filtering: Spatial filtering is used to process an image by applying filters (kernels) to its pixels. Filtering operations like edge detection, smoothing, and sharpening can enhance or extract specific features.

- Frequency Domain Processing: Frequency domain processing involves converting an image from the spatial domain to the frequency domain using techniques like the Fourier transform. This enables operations such as filtering out specific frequencies or enhancing certain patterns.

- Image Analysis and Interpretation: In this step, the processed image is analyzed to extract meaningful information. Object detection, recognition, and classification are common tasks that fall under image analysis.

- Visualization and Display: Finally, the processed image is displayed on a screen or in printed media for interpretation by humans, or passed on for further analysis by machines.
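
As a rough illustration of the noise-reduction and spatial-filtering steps listed above, the following minimal sketch applies a 3x3 median filter to a small grayscale image stored as a list of rows. The function name `median_filter_3x3`, the clamped border handling, and the sample pixel values are assumptions made for this example; a real pipeline would more likely rely on an image library.

```python
from statistics import median


def median_filter_3x3(image):
    """Apply a 3x3 median filter to a grayscale image given as a list of rows.

    Border pixels are handled by clamping neighbour coordinates to the
    image edge (an assumption of this sketch; other border policies exist).
    """
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect the 9 neighbours around (y, x), clamped to the image.
            window = [
                image[min(max(y + dy, 0), height - 1)][min(max(x + dx, 0), width - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            row.append(median(window))
        result.append(row)
    return result


# Hypothetical 4x4 image: a uniform background with one "salt" noise pixel.
noisy = [
    [10, 10, 10, 10],
    [10, 255, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]
cleaned = median_filter_3x3(noisy)
```

Because the median ignores a single extreme value in each window, the isolated bright pixel is replaced by the background level, which is why median filtering is a common choice for impulse ("salt-and-pepper") noise.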

These steps may vary based on the specific application and goals of the image processing task.
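
For instance, a task that only needs to separate objects from a background may centre on the segmentation step. The sketch below shows one possible thresholding approach, Otsu's method, which picks the gray level that maximizes the variance between the two resulting pixel classes. The function names `otsu_threshold` and `segment` and the sample image are hypothetical, and the code assumes integer pixel values in the range 0 to 255.

```python
def otsu_threshold(image, levels=256):
    """Return the gray level t that maximizes between-class variance.

    Pixels with value <= t form one class, pixels with value > t the other.
    """
    # Build the gray-level histogram.
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1

    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight_bg, sum_bg = 0, 0
    for t in range(levels):
        weight_bg += hist[t]
        sum_bg += t * hist[t]
        if weight_bg == 0:
            continue  # no pixels at or below t yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every pixel is already in the background class
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        var_between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def segment(image, threshold):
    """Return a binary mask: 1 where the pixel is brighter than the threshold."""
    return [[1 if value > threshold else 0 for value in row] for row in image]


# Hypothetical image with a dark background and a few bright object pixels.
picture = [
    [20, 30, 200],
    [30, 220, 210],
    [20, 200, 30],
]
threshold = otsu_threshold(picture)
mask = segment(picture, threshold)
```

The resulting mask marks the bright pixels as one region and the dark pixels as another. Clustering or edge-based methods would replace `otsu_threshold` in applications where a single global threshold is not enough.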

The process can involve a combination of techniques from various fields, including signal processing, computer vision, and machine learning.
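
To illustrate the signal-processing side, here is a hedged sketch of frequency-domain low-pass filtering. It uses a naive 2-D discrete Fourier transform written with the standard library, which is only practical for tiny images; production code would normally use a fast Fourier transform from a numerical library. The names `dft2`, `idft2`, and `low_pass`, the circular cutoff, and the checkerboard test image are assumptions of this example.

```python
import cmath


def dft2(image):
    """Naive 2-D discrete Fourier transform of a list-of-rows image."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(
                image[y][x] * cmath.exp(-2j * cmath.pi * (u * y / h + v * x / w))
                for y in range(h)
                for x in range(w)
            )
            for v in range(w)
        ]
        for u in range(h)
    ]


def idft2(spectrum):
    """Inverse of dft2; returns the real part of the reconstructed image."""
    h, w = len(spectrum), len(spectrum[0])
    return [
        [
            sum(
                spectrum[u][v] * cmath.exp(2j * cmath.pi * (u * y / h + v * x / w))
                for u in range(h)
                for v in range(w)
            ).real / (h * w)
            for x in range(w)
        ]
        for y in range(h)
    ]


def low_pass(image, cutoff):
    """Zero every frequency farther than `cutoff` from DC, then invert."""
    h, w = len(image), len(image[0])
    spectrum = dft2(image)
    for u in range(h):
        for v in range(w):
            # Indices above N/2 represent negative frequencies, so measure
            # the distance to DC using the wrapped index.
            fu, fv = min(u, h - u), min(v, w - v)
            if (fu * fu + fv * fv) ** 0.5 > cutoff:
                spectrum[u][v] = 0
    return idft2(spectrum)


# Hypothetical 4x4 checkerboard: the highest-frequency pattern on this grid.
checkerboard = [[100 if (x + y) % 2 else 0 for x in range(4)] for y in range(4)]
smoothed = low_pass(checkerboard, cutoff=1)
```

The checkerboard consists of a constant (DC) component plus a single high-frequency component, so removing the high frequencies leaves a flat image at the mean gray level of about 50. This is the frequency-domain counterpart of smoothing with a spatial filter.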
