Fundamentals of image formation

The fundamentals of image formation remain relevant to deep learning for computer vision: every image a network sees is produced by light interacting with a scene and being recorded by a camera, and that process shapes the data the network learns from.

Deep learning methods learn features automatically from data, but an understanding of image formation still helps in designing models, preprocessing data, and interpreting network behavior.

How image formation concepts relate to deep learning in computer vision:

- Feature Learning: Deep learning models learn hierarchical features from raw image data. Understanding how light interacts with objects and how cameras capture this information can guide the design of architectures that capture relevant features at different levels.

-

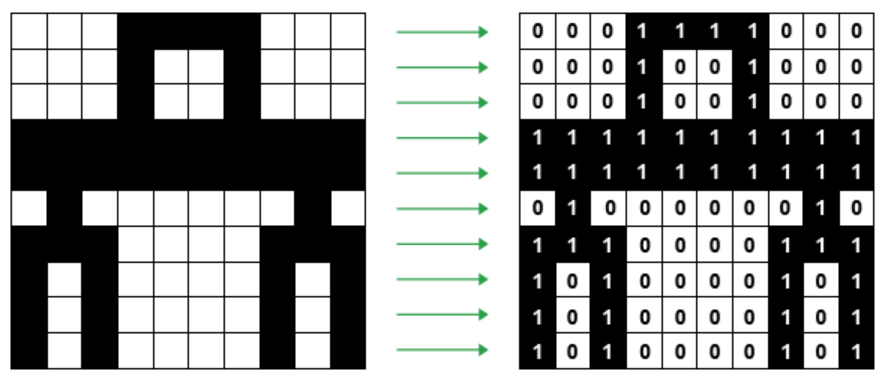

Convolutional Neural Networks (CNNs): CNNs are inspired by the human visual system and leverage local connectivity. Convolutional layers in CNNs perform operations similar to image filtering, where small kernels scan the input to capture patterns and features. These operations are rooted in the way light interacts with objects and passes through lenses.

- Normalization and Preprocessing: Knowledge of image formation can help guide preprocessing steps such as histogram equalization, color normalization, and noise reduction. These preprocessing techniques can enhance the quality of training data and improve model performance.

- Data Augmentation: Augmenting data with transformations like rotation, flipping, and cropping is motivated by the fact that real-world images show objects in various orientations, positions, and scales because both cameras and objects move.

- Camera Geometry and Transformation: Understanding camera projection and geometric transformations helps in tasks like image rectification, where images are warped to remove lens distortion or to align them to a common viewpoint. This is important for tasks like stereo vision and 3D reconstruction.

- Loss Functions and Metrics: Loss functions and metrics used in deep learning often take into account the spatial relationships of objects in images. For instance, intersection over union (IoU) measures the overlap between predicted and ground-truth bounding boxes, whereas mean squared error compares coordinates or pixel values directly and does not measure overlap.

- Image Segmentation: Image segmentation techniques are influenced by the way objects are delimited by their boundaries in images. Concepts like contour detection and edge detection rely on the changes in intensity and color that occur where an object meets its surroundings.

- Interpretability: Understanding how deep learning models process images can help in interpreting model decisions. Techniques like gradient-based saliency maps provide insights into which parts of an image contribute most to a model's prediction.

- Transfer Learning: Transfer learning involves transferring knowledge from one task or dataset to another. Knowledge of image formation can guide the selection of pre-trained models or fine-tuning strategies that suit the image characteristics of the target task.
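
The filtering analogy in the CNN item above can be made concrete with a small sketch. It assumes a toy grayscale image stored as nested lists and a fixed Sobel kernel; the names `filter2d`, `image`, and `sobel_x` are illustrative and do not come from any library. In a real CNN the kernel values would be learned rather than fixed by hand.

```python
def filter2d(image, kernel):
    """Slide `kernel` over `image` (valid positions only) and sum the products.

    This is cross-correlation, which is the operation deep learning
    frameworks usually call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 4x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Sobel kernel that responds to left-to-right changes in brightness.
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

response = filter2d(image, sobel_x)
for row in response:
    print(row)
```

The response is nonzero only where the window straddles the dark-to-bright boundary, which is the sense in which a kernel "detects" a local pattern such as a vertical edge.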

While deep learning models are data-driven and can learn intricate patterns automatically, a solid foundation in image formation can provide a deeper understanding of how these models operate and help in making informed decisions throughout the training and deployment phases.
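
As one concrete instance of the spatial metrics mentioned in the list above, the following is a minimal sketch of intersection over union for two axis-aligned boxes. It assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples; the function name `box_iou` and the example boxes are hypothetical and chosen only for illustration.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    # Guard against degenerate boxes with zero total area.
    return intersection / union if union > 0 else 0.0


# Hypothetical predicted and ground-truth boxes that overlap in a 1x1 square.
predicted = (0, 0, 2, 2)
ground_truth = (1, 1, 3, 3)
print(box_iou(predicted, ground_truth))
```

Here the overlap has area 1 and the union has area 7, so the score is 1/7. Identical boxes score 1 and disjoint boxes score 0.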
