Transfer learning

Transfer learning is a machine learning technique, commonly used in deep learning, where a model trained on one task is adapted or fine-tuned for a different but related task. It's a powerful method that leverages the knowledge learned from a large and typically diverse source dataset to improve the performance of a model on a smaller, target dataset. Transfer learning has been instrumental in achieving state-of-the-art results in various domains, particularly in computer vision and natural language processing. Here's an overview of transfer learning in deep learning:

Pre-trained Models:

- Transfer learning starts with a model that has already been trained on a large, general-purpose dataset. Such models are called pre-trained or base models.

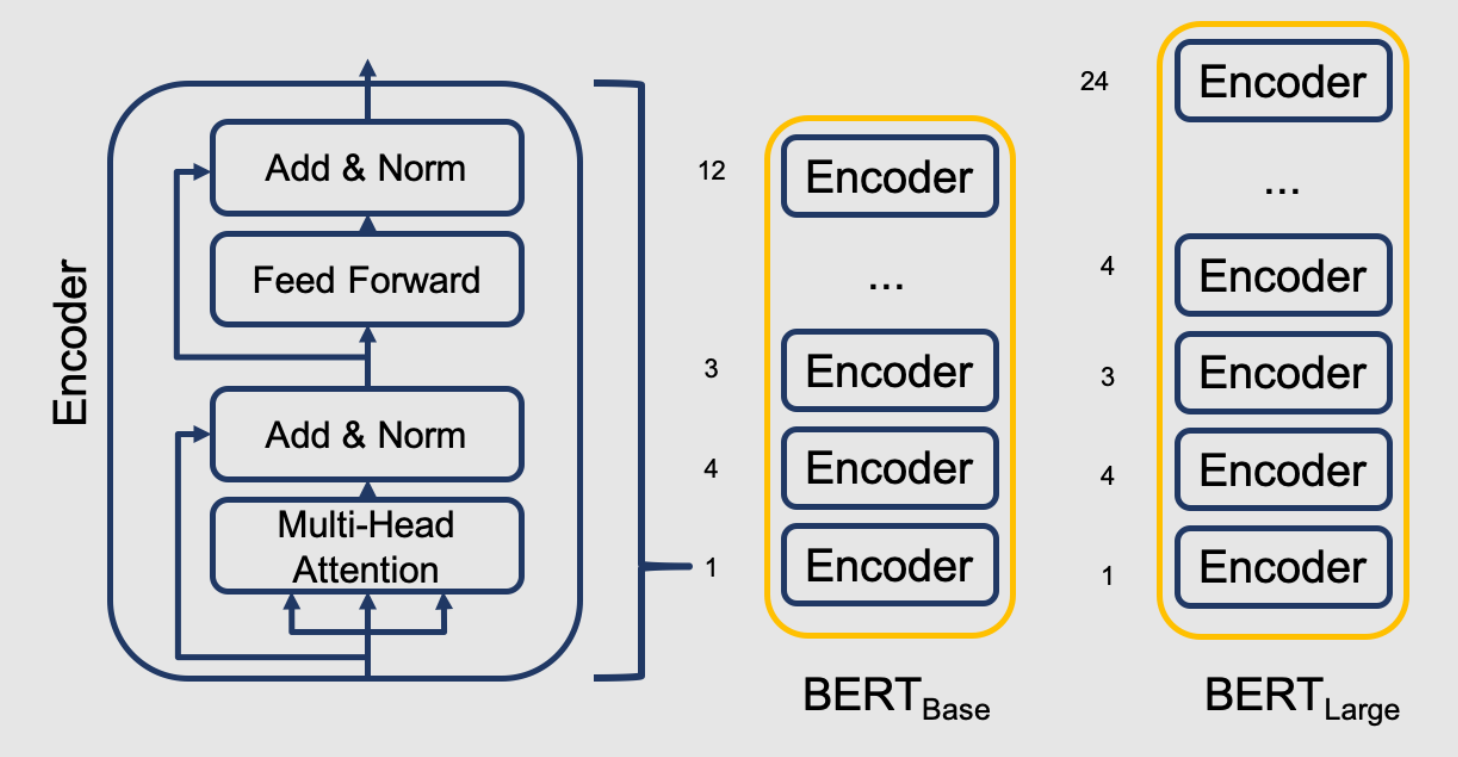

- Common pre-trained models in computer vision include VGG, ResNet, Inception, and MobileNet. In natural language processing, pre-trained word embeddings such as Word2Vec and GloVe and pre-trained language models such as BERT are popular.

Target Task and Dataset:

- After obtaining a pre-trained model, the next step is to define a target task and gather a dataset specific to that task.

- The target task should be related to the source task (the task the model was originally trained on), although the two may differ in some respects.

Fine-Tuning:

- Fine-tuning is the process of modifying the pre-trained model to adapt it to the target task. It involves updating some or all of the model's parameters based on the target dataset.

- Typically, the earlier layers of the pre-trained model, which capture more general features, are kept frozen (their weights are not updated), while the later layers are fine-tuned to learn task-specific features.

- The learning rate for fine-tuning is usually set lower than the one used during pre-training, so that the updates adapt the model without overwriting the knowledge it has already learned.
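The freeze-then-fine-tune recipe described above can be sketched without a deep-learning framework. This is a toy, pure-Python illustration under stated assumptions: `PRETRAINED_BASE` is a hypothetical set of hand-picked weights standing in for a real pre-trained network, the target task is synthetic, and `TinyNet` is an illustrative class, not a library API. In stage 1 the base layer is frozen (its learning rate is 0) and only the new head is trained; in stage 2 the base is unfrozen with a learning rate 100 times smaller than the head's.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

class TinyNet:
    """Hypothetical toy model: a 'pretrained' ReLU base layer plus a new logistic head."""

    def __init__(self, base_weights):
        self.base = [row[:] for row in base_weights]  # copy so the source weights stay intact
        self.head = [0.0] * len(base_weights)         # new head for the target task
        self.head_bias = 0.0

    def _forward(self, x):
        pre = [sum(w * xi for w, xi in zip(row, x)) for row in self.base]
        h = [max(0.0, p) for p in pre]                # ReLU features from the base
        logit = sum(w * hi for w, hi in zip(self.head, h)) + self.head_bias
        return pre, h, sigmoid(logit)

    def predict(self, x):
        return self._forward(x)[2]

    def train_step(self, x, y, head_lr, base_lr):
        """One SGD step on binary cross-entropy; base_lr == 0 keeps the base frozen."""
        pre, h, p = self._forward(x)
        err = p - y                                   # dLoss/dlogit for sigmoid + BCE
        if base_lr > 0:
            # Backpropagate into the base using the head weights from before this update.
            for j, row in enumerate(self.base):
                grad_pre = err * self.head[j] if pre[j] > 0 else 0.0
                for i in range(len(row)):
                    row[i] -= base_lr * grad_pre * x[i]
        for j in range(len(self.head)):
            self.head[j] -= head_lr * err * h[j]
        self.head_bias -= head_lr * err

def accuracy(net, data):
    return sum((net.predict(x) > 0.5) == (y == 1) for x, y in data) / len(data)

# Hypothetical "pretrained" weights standing in for a model trained on a source task.
PRETRAINED_BASE = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0], [-1.0, 1.0]]

# Small synthetic target task: the label is 1 when x0 > x1.
rng = random.Random(0)
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
target_data = [((a, b), 1 if a > b else 0) for a, b in points]

net = TinyNet(PRETRAINED_BASE)

# Stage 1: base frozen (base_lr=0.0), only the new head is trained.
for _ in range(50):
    for x, y in target_data:
        net.train_step(x, y, head_lr=0.5, base_lr=0.0)
base_unchanged = net.base == PRETRAINED_BASE
frozen_acc = accuracy(net, target_data)

# Stage 2: unfreeze the base with a learning rate 100x smaller than the head's.
for _ in range(10):
    for x, y in target_data:
        net.train_step(x, y, head_lr=0.5, base_lr=0.005)
finetuned_acc = accuracy(net, target_data)

print(f"base unchanged after stage 1: {base_unchanged}")
print(f"accuracy after stage 1: {frozen_acc:.2f}, after stage 2: {finetuned_acc:.2f}")
```

In a framework such as PyTorch, freezing is usually done by setting `requires_grad = False` on the base parameters, and per-layer learning rates are set by passing separate parameter groups to the optimizer; the toy version above makes both ideas explicit as `base_lr`.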

Transfer Learning Strategies:

- There are different strategies for transfer learning, depending on the similarity between the source and target tasks:

- Feature Extraction: In this approach, the pre-trained model is kept frozen and acts as a feature extractor, and the extracted features are used as input to a new model specific to the target task. This is common in computer vision.

- Fine-Tuning All Layers: You might fine-tune all layers of the pre-trained model when the target dataset is large enough to update every weight without overfitting; this tends to work best when the source and target tasks are similar.

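- Domain Adaptation: Domain adaptation techniques are used when the task stays the same but the input data distributions of the source and target domains differ substantially, for example when a model trained on synthetic images must work on real photographs.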

- Multi-Task Learning: Multi-task learning involves training a single model on multiple related tasks simultaneously, so that the tasks share a common learned representation.

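Feature extraction differs from fine-tuning in that the pre-trained model is never updated: features are computed once and a separate model is fit on them. Below is a minimal pure-Python sketch under stated assumptions: `PRETRAINED_BASE` is a hypothetical set of hand-picked weights standing in for a real pre-trained network, the data is synthetic, and a nearest-centroid classifier plays the role of the new task-specific model only because it is short (a logistic regression or small neural network is more typical in practice).

```python
import math
import random

# Hypothetical frozen "pretrained" extractor weights; nothing below modifies them.
PRETRAINED_BASE = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0], [-1.0, 1.0]]

def extract_features(x):
    """Run the frozen base: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_BASE]

def fit_nearest_centroid(features, labels):
    """New task-specific model: the mean feature vector of each class."""
    centroids = {}
    for label in set(labels):
        rows = [f for f, y in zip(features, labels) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return centroids

def predict(centroids, feature_vec):
    # Pick the class whose centroid is closest in Euclidean distance.
    return min(centroids, key=lambda c: math.dist(centroids[c], feature_vec))

def make_data(seed, n):
    # Synthetic target task: the label is 1 when x0 > x1.
    rng = random.Random(seed)
    pts = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    return pts, [1 if a > b else 0 for a, b in pts]

train_x, train_y = make_data(seed=0, n=200)
test_x, test_y = make_data(seed=1, n=200)

# Features are computed once and cached; only the new classifier is fit.
train_feats = [extract_features(x) for x in train_x]
centroids = fit_nearest_centroid(train_feats, train_y)

test_preds = [predict(centroids, extract_features(x)) for x in test_x]
test_acc = sum(p == y for p, y in zip(test_preds, test_y)) / len(test_y)
print(f"held-out accuracy: {test_acc:.2f}")
```

Because the extractor is frozen, the training-set features can be computed once and reused, which is what makes this strategy cheaper than fine-tuning when the target dataset is small.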

Benefits of Transfer Learning:

- Transfer learning offers several advantages, including faster convergence, reduced data requirements for the target task, and improved generalization.

- It is especially useful when you have a limited amount of labeled data for the target task.

Challenges:

- Transfer learning may work poorly if the source and target tasks are too dissimilar; in such cases it can even perform worse than training from scratch, a problem known as negative transfer.

- Domain shift, where the data distribution of the target dataset differs significantly from that of the source dataset, can also degrade performance.

Applications:

- Transfer learning has been successfully applied in various domains, such as image classification, object detection, machine translation, sentiment analysis, and more.

In summary, transfer learning is a valuable technique in deep learning that allows models to leverage knowledge learned from one task and apply it to another, related task. It has played a pivotal role in improving the efficiency and effectiveness of deep learning models in various real-world applications.
