Neural Network

In deep learning, a neural network is a computational model inspired by the structure and functioning of biological neural networks, such as the human brain. Neural networks are composed of interconnected nodes, called artificial neurons, arranged in layers: an input layer, one or more hidden layers, and an output layer. They are used for a wide range of machine learning tasks, including classification, regression, image recognition, and natural language processing.

Basic Structure of Neural Networks

Neural networks consist of three primary types of layers:

- Input Layer: Receives the raw input data. Each neuron in this layer represents one feature of the input. The layer simply passes these values on and has no weights of its own.

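- Hidden Layers: Sit between the input and output layers and transform the data. Each hidden neuron computes a weighted sum of the previous layer's outputs and applies an activation function. A network can have many hidden layers. Stacking them is what makes a network "deep" and lets it learn more complex functions.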

- Output Layer: This layer produces the final output of the network, corresponding to the prediction or classification.
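The layer sizes largely determine how many parameters a network has. The sketch below illustrates this under two assumptions. First, every layer is fully connected (dense), so each neuron in one layer connects to every neuron in the next. Second, each weight matrix is stored with one row per neuron in the receiving layer. The helper names `layer_shapes` and `count_parameters` are hypothetical and not taken from any library.

```python
def layer_shapes(layer_sizes):
    """Return (weight_shape, bias_shape) for each pair of consecutive layers.

    layer_sizes lists the neuron count per layer: [inputs, hidden..., outputs].
    Assumes fully connected layers; weight matrices are (n_out, n_in).
    """
    shapes = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # One weight per (input neuron, output neuron) pair, one bias per output neuron.
        shapes.append(((n_out, n_in), (n_out,)))
    return shapes


def count_parameters(layer_sizes):
    """Total number of trainable weights and biases in a dense network."""
    return sum(w[0] * w[1] + b[0] for w, b in layer_shapes(layer_sizes))


# 3 input features, two hidden layers of 4 neurons each, 1 output neuron.
sizes = [3, 4, 4, 1]
print(layer_shapes(sizes))      # [((4, 3), (4,)), ((4, 4), (4,)), ((1, 4), (1,))]
print(count_parameters(sizes))  # 41
```

The input layer contributes no parameters of its own. Its size only fixes the number of columns in the first weight matrix.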

Neurons and Connections

- Neurons: The basic units of a neural network. Each neuron computes a weighted sum of its inputs, adds a bias, applies an activation function to the result, and passes the output to the next layer.

- Weights: Parameters on the connections between neurons in adjacent layers. A weight's magnitude sets the strength of a connection, and its sign determines whether the input pushes the neuron's output up or down.

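- Biases: A per-neuron parameter added to the weighted sum of the inputs before the activation function is applied. It shifts the activation function left or right, so the neuron can produce a non-zero output even when all its inputs are zero, which helps the model fit the data better.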
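A single neuron can be sketched in a few lines. It multiplies each input by its weight, sums the results, adds the bias, and passes the total through an activation function. The function name `neuron_output` and the example numbers are illustrative assumptions. ReLU is used here only as a convenient activation.

```python
def neuron_output(inputs, weights, bias, activation):
    """Compute one artificial neuron's output: activation(sum_i w_i * x_i + b)."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # weighted sum plus bias
    return activation(z)


def relu(z):
    return max(0.0, z)


x = [1.0, 2.0]
w = [0.5, -0.25]  # one positive and one negative connection (hypothetical values)

# Without a bias the weighted sum is exactly 0, so ReLU outputs 0.
print(neuron_output(x, w, bias=0.0, activation=relu))  # 0.0
# A positive bias shifts the pre-activation to 0.5, so the neuron now "fires".
print(neuron_output(x, w, bias=0.5, activation=relu))  # 0.5
```

The second call shows the role of the bias: the inputs and weights are unchanged, but shifting the pre-activation changes the neuron's output.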

Activation Functions

Activation functions introduce non-linearity into the network, enabling it to learn complex patterns:

- Sigmoid: Outputs values between 0 and 1 that can be interpreted as probabilities. This makes it a common choice for the output layer in binary classification.

- ReLU (Rectified Linear Unit): Outputs the input directly if it is positive and zero otherwise. Because its gradient is exactly 1 for positive inputs, it helps mitigate the vanishing gradient problem that affects sigmoid and tanh in deep networks.

- Tanh: Outputs values between -1 and 1. Because its outputs are zero-centered, it often trains more easily than sigmoid in hidden layers.

- Leaky ReLU: A variation of ReLU that keeps a small, non-zero slope for negative inputs. This helps avoid "dying" neurons that get stuck outputting zero and stop learning.
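The activation functions listed above are short enough to write directly. This minimal sketch uses only Python's `math` module. It also includes the derivatives that backpropagation needs, which show why ReLU helps with vanishing gradients. The Leaky ReLU slope `alpha=0.01` is a common default, assumed here rather than prescribed.

```python
import math


def sigmoid(z):
    """Maps z into (0, 1). Two branches avoid overflow in math.exp for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    """Maps z into (-1, 1); outputs are zero-centered."""
    return math.tanh(z)


def relu(z):
    """Returns z for positive inputs, 0 otherwise."""
    return z if z > 0 else 0.0


def leaky_relu(z, alpha=0.01):
    """Like ReLU, but negative inputs keep a small slope alpha instead of 0."""
    return z if z > 0 else alpha * z


# Derivatives, as used by backpropagation.
def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # at most 0.25, reached at z = 0


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # at most 1.0, reached at z = 0


def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # exactly 1 for positive inputs; 0 at z = 0 by convention


def leaky_relu_grad(z, alpha=0.01):
    return 1.0 if z > 0 else alpha  # never 0, so the neuron keeps receiving updates


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):+.3f}  "
          f"relu={relu(z):.2f}  leaky_relu={leaky_relu(z):+.3f}")
```

The sigmoid derivative is at most 0.25. Ignoring the weights, a gradient passing back through ten sigmoid layers is therefore scaled by at most 0.25**10, which is under one in a million. ReLU's derivative is exactly 1 for positive inputs, so it does not shrink the signal in the same way.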

Training Neural Networks

- Forward Propagation: The process of passing input data through the network to obtain an output.

- Loss Function: Measures the difference between the network's predicted output and the true target value. Common loss functions include Mean Squared Error (MSE) for regression and Cross-Entropy Loss for classification.

- Backpropagation: The algorithm that computes the gradient of the loss function with respect to every weight and bias. It applies the chain rule layer by layer, from the output back to the input. Each weight is then updated in the opposite direction of its gradient, with the step size set by the learning rate, so that the loss decreases.
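Forward propagation, the loss, backpropagation and the weight update fit together as in the minimal sketch below. A network with one tanh hidden layer and a sigmoid output is trained on XOR with binary cross-entropy loss. The architecture, data, learning rate and all function names (`forward`, `backward`, `gd_step`, `grad_check`, and so on) are assumptions chosen for illustration.

`backward` applies the chain rule from the output back to the input. `grad_check` compares its result with a finite-difference estimate, a common way to confirm that a backpropagation implementation is correct.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in=2, n_hidden=3, seed=0):
    """Small random weights and zero biases (one common choice among several)."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Forward propagation: inputs -> tanh hidden layer -> sigmoid output."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hi for w, hi in zip(p["w2"], h)) + p["b2"])
    return h, y_hat


def loss(y_hat, y):
    """Binary cross-entropy for one example (clamped to avoid log(0))."""
    y_hat = min(max(y_hat, 1e-12), 1 - 1e-12)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))


def backward(p, x, y):
    """Backpropagation: gradient of the loss for every weight and bias."""
    h, y_hat = forward(p, x)
    dz2 = y_hat - y  # dLoss/d(output pre-activation) for sigmoid + cross-entropy
    # Chain rule back through w2 and tanh, using tanh'(z) = 1 - tanh(z)**2.
    dz1 = [dz2 * w * (1 - hi ** 2) for w, hi in zip(p["w2"], h)]
    return {
        "W1": [[d * xi for xi in x] for d in dz1],
        "b1": dz1,
        "w2": [dz2 * hi for hi in h],
        "b2": dz2,
    }


def gd_step(p, g, lr):
    """Move every parameter a small step against its gradient."""
    p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)]
               for row, grow in zip(p["W1"], g["W1"])]
    p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
    p["w2"] = [w - lr * gw for w, gw in zip(p["w2"], g["w2"])]
    p["b2"] -= lr * g["b2"]


def grad_check(p, x, y, eps=1e-6):
    """Largest gap between backprop gradients and central finite differences."""
    g = backward(p, x, y)
    slots = [(row, j, g["W1"][i][j])
             for i, row in enumerate(p["W1"]) for j in range(len(row))]
    slots += [(p["b1"], i, gi) for i, gi in enumerate(g["b1"])]
    slots += [(p["w2"], i, gi) for i, gi in enumerate(g["w2"])]
    slots.append((p, "b2", g["b2"]))
    worst = 0.0
    for container, key, analytic in slots:
        original = container[key]
        container[key] = original + eps
        up = loss(forward(p, x)[1], y)
        container[key] = original - eps
        down = loss(forward(p, x)[1], y)
        container[key] = original  # restore the parameter
        worst = max(worst, abs((up - down) / (2 * eps) - analytic))
    return worst


def mean_loss(p, data):
    return sum(loss(forward(p, x)[1], y) for x, y in data) / len(data)


# XOR is not linearly separable, so the hidden layer is required.
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
params = init_params()
print("max gradient error:", grad_check(params, (1.0, 0.0), 1.0))
initial_loss = mean_loss(params, data)
for _ in range(2000):
    for x, y in data:
        gd_step(params, backward(params, x, y), lr=0.5)
final_loss = mean_loss(params, data)
print(f"mean loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

The `dz2 = y_hat - y` shortcut only holds because a sigmoid output is paired with cross-entropy loss. With a different output activation or loss, that first gradient changes, while the rest of the chain-rule bookkeeping stays the same.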

Optimization Algorithms

Optimization algorithms are used to minimize the loss function by adjusting the weights:

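- Stochastic Gradient Descent (SGD): Updates the weights using the gradient computed on a single randomly chosen example or a small random subset (mini-batch) of the data, rather than on the full dataset. Each update is much cheaper to compute, which usually makes training on large datasets faster.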

- Adam (Adaptive Moment Estimation): Combines the advantages of two other extensions of SGD, AdaGrad and RMSProp. It keeps running averages of each parameter's gradient and squared gradient and uses them to give every parameter its own adaptive learning rate.
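The difference between the two optimizers lies in their update rules. The sketch below fits a hypothetical noise-free line y = 2x + 1 with mean squared error, drawing a random mini-batch of 4 points at each step. The data, batch size, step count and learning rates are assumptions for illustration.

The Adam class follows the standard update: bias-corrected running averages of the gradient and squared gradient. It uses the commonly cited defaults beta1 = 0.9, beta2 = 0.999 and eps = 1e-8. The learning rate is 0.05 rather than the usual 0.001 so this toy problem converges quickly.

```python
import math
import random

# Hypothetical noise-free training data sampled from y = 2x + 1 on [-1, 1].
DATA = [(k / 10, 2 * (k / 10) + 1) for k in range(-10, 11)]


def minibatch_grad(params, batch):
    """Gradient of the mean squared error of y_hat = w*x + b over one mini-batch."""
    w, b = params
    gw = gb = 0.0
    for x, y in batch:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    return [gw / len(batch), gb / len(batch)]


def sgd_update(params, grads, lr=0.1):
    """SGD: step against the gradient of the current mini-batch."""
    return [p - lr * g for p, g in zip(params, grads)]


class Adam:
    """Adam: per-parameter step sizes from running moments of the gradient."""

    def __init__(self, n_params, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # running mean of gradients (first moment)
        self.v = [0.0] * n_params  # running mean of squared gradients (second moment)
        self.t = 0

    def update(self, params, grads):
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # undo the bias toward 0
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


def train(update, steps=2000, batch_size=4, seed=0):
    rng = random.Random(seed)
    params = [0.0, 0.0]  # [w, b]
    for _ in range(steps):
        batch = rng.sample(DATA, batch_size)  # a random subset of the data
        params = update(params, minibatch_grad(params, batch))
    return params


w_sgd, b_sgd = train(sgd_update)
w_adam, b_adam = train(Adam(n_params=2).update)
print(f"SGD:  w={w_sgd:.3f}, b={b_sgd:.3f}")
print(f"Adam: w={w_adam:.3f}, b={b_adam:.3f}")
```

Both runs should end near w = 2, b = 1 on this problem. Adam's per-parameter scaling matters most when different parameters see gradients of very different magnitudes, a situation this tiny example barely exercises.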

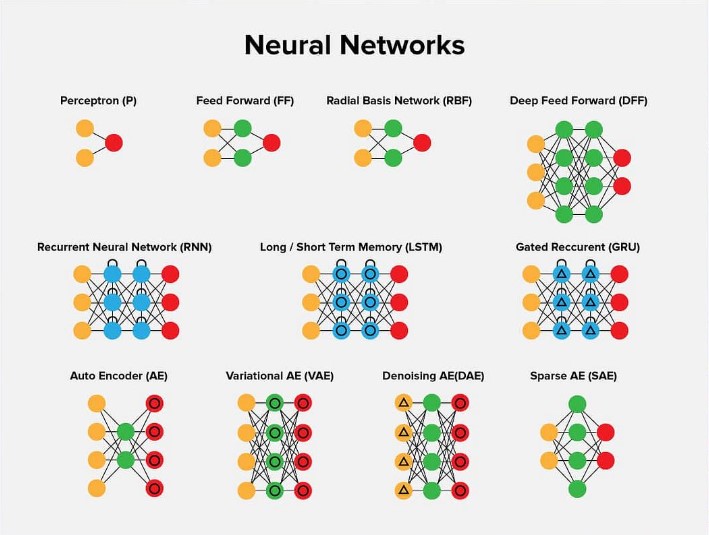

Types of Neural Networks

- Feedforward Neural Networks (FNN): The simplest type where connections do not form cycles. Data moves in one direction, from input to output.

- Convolutional Neural Networks (CNN): Designed for processing structured grid data like images. They use convolutional layers to automatically and adaptively learn spatial hierarchies of features.

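  - Convolutional Layers: Slide small learnable filters (kernels) across the input to detect local spatial features such as edges and textures.

  - Pooling Layers: Downsample the feature maps, for example by keeping the maximum value in each small window. This reduces their spatial size while retaining the most important features.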

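- Recurrent Neural Networks (RNN): Specialized for sequential data such as text, speech, and time series. Their connections form cycles. At each time step, the network combines the current input with a hidden state carried over from the previous step, which acts as a memory of earlier inputs.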

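  - LSTM (Long Short-Term Memory): A type of RNN that uses a cell state together with input, forget, and output gates to retain information over long sequences. This greatly mitigates, though does not fully eliminate, the vanishing gradient problem.

  - GRU (Gated Recurrent Unit): A simplified variant of the LSTM that uses only update and reset gates and no separate cell state, so it has fewer parameters.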

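- Generative Adversarial Networks (GANs): Consist of two networks, a generator that creates synthetic data and a discriminator that tries to distinguish it from real data. They are trained together in a zero-sum game framework, in which the generator improves by learning to fool the discriminator.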
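The core computations of two of these architectures can be sketched without a framework. The functions below are illustrative assumptions, not a library API:

- `conv2d` and `max_pool2d` operate on a single-channel image stored as nested lists.
- `rnn_step` implements one time step of a simple (Elman-style) RNN.

Real CNN and RNN layers add channels, batching, padding, strides, and learned weights. The edge-detecting kernel and the RNN weights here are made-up values.

```python
import math


def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1 and no padding.

    As in most deep learning libraries, the kernel is not flipped, so strictly
    speaking this computes cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [[max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


def rnn_step(x, h_prev, W_xh, W_hh, b):
    """One step of a simple RNN: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b)."""
    return [math.tanh(sum(w * xi for w, xi in zip(W_xh[k], x))
                      + sum(u * hj for u, hj in zip(W_hh[k], h_prev))
                      + b[k])
            for k in range(len(b))]


# CNN: a vertical-edge kernel responds where the image changes from 0 to 1.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1],
               [-1, 1]]
fmap = conv2d(image, edge_kernel)
print(fmap)              # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool2d(fmap))  # [[2, 0], [2, 0]]

# RNN: the hidden state carries information about the first input forward in time.
W_xh = [[0.5], [-0.5]]
W_hh = [[0.9, 0.0], [0.0, 0.9]]
b = [0.0, 0.0]
h = [0.0, 0.0]
for x in ([1.0], [0.0], [0.0]):
    h = rnn_step(x, h, W_xh, W_hh, b)
print(h)  # still non-zero two steps after the only non-zero input
```

The pooled map keeps the location of the edge while halving each spatial dimension. The RNN's final hidden state is non-zero only because of the first input, which illustrates how the recurrent connection acts as memory.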

Applications of Neural Networks

- Image Recognition: Identifying and classifying objects within images.

- Speech Recognition: Converting spoken language into text.

- Natural Language Processing (NLP): Tasks like translation, sentiment analysis, and text generation.

- Recommendation Systems: Predicting user preferences and suggesting relevant items.

- Autonomous Vehicles: Object detection, path planning, and decision-making in self-driving cars.

Challenges and Considerations

- Overfitting: When a model performs well on training data but poorly on unseen data. Techniques such as dropout, L2 regularization (weight decay), and early stopping can help mitigate this.

- Computational Resources: Training deep neural networks requires substantial computational power and memory.

- Data Requirements: Supervised deep learning models typically need large amounts of labeled data to achieve high performance.
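Dropout and L2 regularization, two of the overfitting remedies mentioned above, are both short to express. This sketch shows inverted dropout, the variant that rescales surviving activations during training so that nothing needs to change at inference time. It also shows an L2 penalty term that would be added to the loss. The function names, the drop probability of 0.5, and the penalty strength `lam` are illustrative assumptions.

```python
import random


def dropout(activations, p_drop, training, rng):
    """Inverted dropout.

    During training, zero each activation with probability p_drop and scale the
    survivors by 1 / (1 - p_drop) so the expected activation is unchanged.
    At inference time, return the activations untouched.
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_penalty(weights, lam):
    """L2 regularization term lam * sum(w**2); adding it to the loss discourages large weights."""
    return lam * sum(w * w for w in weights)


rng = random.Random(42)
hidden = [1.0] * 10_000
dropped = dropout(hidden, p_drop=0.5, training=True, rng=rng)
print(sum(a == 0.0 for a in dropped))  # roughly half of the 10,000 entries are zeroed
print(sum(dropped) / len(dropped))     # close to 1.0, the original mean activation
print(dropout([1.0, 1.0, 1.0], 0.5, training=False, rng=rng))  # [1.0, 1.0, 1.0]
print(l2_penalty([0.5, -2.0], lam=0.01))
```

Scaling during training, rather than at inference, means the trained network can be used as-is without remembering the drop probability.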

Neural networks are the backbone of deep learning, enabling the development of models that can understand and interpret complex data patterns, leading to advancements across various domains and applications.
