Artificial Neural Network

An artificial neural network (ANN), commonly called a neural network, is a computational model inspired by the structure and function of biological neural networks such as the human brain. Neural networks are the foundation of deep learning and are widely used because they can learn complex, non-linear relationships directly from data.

Structure of an ANN

ANNs consist of three main types of layers:

- Input Layer: Receives the initial data.

- Hidden Layers: Intermediate layers that perform computations and transformations on the input data. An ANN can have many hidden layers; the number of layers is the network's depth, and deeper networks can represent more complex functions.

- Output Layer: Produces the final output, such as a prediction or classification.
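The layer structure above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the layer sizes `[3, 4, 4, 2]`, the helper names `make_layer` and `build_network`, and the uniform random initialization are all assumptions chosen for the example.

```python
import random

def make_layer(n_inputs, n_outputs, rng):
    """Return (weights, biases) for one fully connected layer.

    weights[j][i] is the weight from input i to neuron j.
    """
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases

def build_network(layer_sizes, seed=0):
    """Build one (weights, biases) pair per layer after the input layer."""
    rng = random.Random(seed)
    return [make_layer(n_in, n_out, rng)
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

# Hypothetical sizes: 3 inputs, two hidden layers of 4 neurons, 2 outputs.
layer_sizes = [3, 4, 4, 2]
network = build_network(layer_sizes)

for index, (weights, biases) in enumerate(network, start=1):
    print(f"layer {index}: {len(weights)} neurons, {len(weights[0])} inputs each")
```

The input layer holds no parameters of its own; it only fixes how many values each neuron in the first hidden layer receives.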

Neurons and Connections

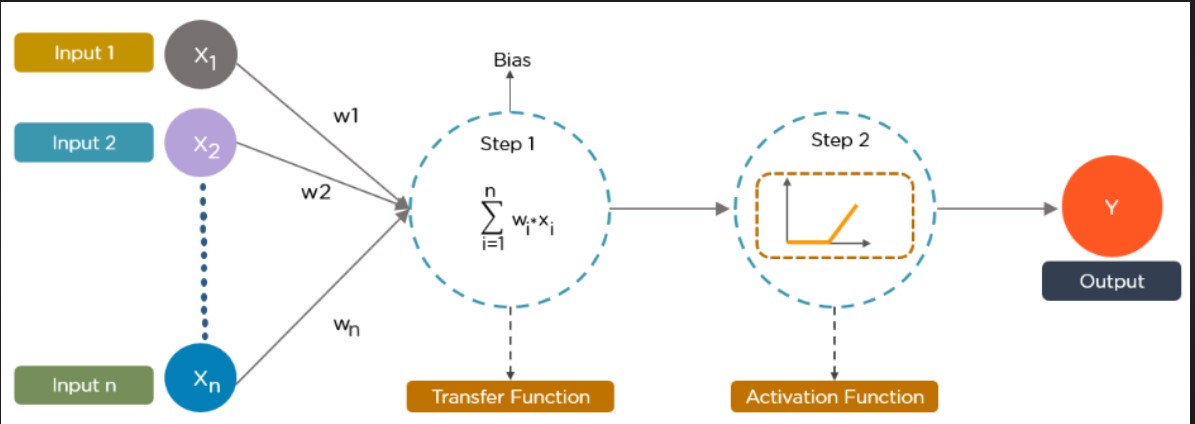

- Neurons: The basic units of an ANN that process input, apply an activation function, and pass the result to the next layer.

- Weights: Parameters attached to the connections between neurons in adjacent layers. The magnitude of a weight represents the strength of the connection and its sign represents the direction of its influence. Weights are adjusted during training.

- Biases: Additional parameters, one per neuron, added to the weighted sum of the neuron's inputs before the activation function is applied. The bias shifts the activation function, improving the model's ability to fit the data.
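As a rough sketch of how these pieces combine, a single neuron computes a weighted sum of its inputs, adds its bias, and applies an activation function. The input values, weights, and bias below are made up for illustration, and sigmoid is used only as one possible activation.

```python
import math

def sigmoid(z):
    """Squash z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Illustrative values only.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

print(neuron_output(inputs, weights, bias))
```

Here the weighted sum is 0.2 - 0.3 - 0.4 + 0.1 = -0.4, so the sigmoid output is slightly below 0.5.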

Activation Functions

Activation functions introduce non-linearity into the network, enabling it to learn and model complex patterns:

- Sigmoid: Outputs values between 0 and 1, which can be read as probabilities. It is commonly used in the output layer for binary classification.

- ReLU (Rectified Linear Unit): Outputs the input directly if it is positive; otherwise, it outputs zero. It helps mitigate the vanishing gradient problem.

- Tanh: Outputs values between -1 and 1. Because its output is zero-centered, it is often preferred over sigmoid in hidden layers.

- Leaky ReLU: A variation of ReLU that allows a small gradient when the input is negative, helping to avoid dying neurons.
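The four functions listed above are short enough to write out directly. The following is a plain-Python sketch for scalar inputs; the slope `alpha=0.01` for Leaky ReLU is a commonly used default assumed here, not a value given in this article, and the sigmoid is not hardened against overflow for very large negative inputs.

```python
import math

def sigmoid(z):
    """Output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive inputs through unchanged; output zero otherwise."""
    return max(0.0, z)

def tanh(z):
    """Output in (-1, 1), centered on zero."""
    return math.tanh(z)

def leaky_relu(z, alpha=0.01):
    """Like ReLU, but negative inputs keep a small slope alpha (assumed value)."""
    return z if z > 0 else alpha * z

for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), relu(z), tanh(z), leaky_relu(z))
```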

Training ANNs

The training process involves several key steps:

- Forward Propagation: Input data is passed through the network to generate predictions.

- Loss Calculation: The difference between the predicted output and the actual output is measured using a loss function (e.g., Mean Squared Error for regression, Cross-Entropy Loss for classification).

- Backpropagation: The gradient of the loss with respect to every weight and bias is computed by applying the chain rule backward from the output layer toward the input layer. The parameters are then moved a small step in the direction opposite to the gradient, which reduces the loss.

- Optimization Algorithms: Methods like Stochastic Gradient Descent (SGD) and Adam are used to adjust the weights based on the gradients calculated during backpropagation.
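The steps above can be sketched with the smallest possible model: a single linear neuron with one weight and one bias. The toy data (generated from y = 2x + 1), the learning rate, and the epoch count are assumptions made for illustration. The update rule is plain full-batch gradient descent rather than SGD or Adam, and with only one neuron, backpropagation reduces to a single application of the chain rule.

```python
# Toy data from y = 2x + 1 (assumed for illustration).
data = [(x, 2.0 * x + 1.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]

w, b = 0.0, 0.0          # parameters to learn
learning_rate = 0.05     # assumed hyperparameter

def mse(w, b):
    """Mean squared error of the neuron over the whole data set."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

initial_loss = mse(w, b)

for epoch in range(500):
    grad_w = 0.0
    grad_b = 0.0
    for x, y in data:
        pred = w * x + b                      # forward propagation
        error = pred - y                      # contributes to the loss
        grad_w += 2.0 * error * x / len(data)  # chain rule: d(loss)/dw
        grad_b += 2.0 * error / len(data)      # chain rule: d(loss)/db
    # Gradient descent update: step against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mse(w, b)
print(f"w={w:.4f}, b={b:.4f}, loss {initial_loss:.4f} -> {final_loss:.2e}")
```

On this data the parameters should settle very close to w = 2 and b = 1. In practice, a framework computes the gradients automatically and an optimizer such as SGD or Adam applies the updates.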

Common Architectures of ANNs

While the basic structure of ANNs is quite simple, they can be combined and extended in various ways to handle different tasks:

- Feedforward Neural Networks (FNN): The simplest type of ANN where connections do not form cycles. Data moves in one direction, from input to output.

- Multilayer Perceptrons (MLP): A feedforward network made of fully connected layers, with one or more hidden layers and non-linear activation functions, capable of learning non-linear functions.

- Recurrent Neural Networks (RNN): Designed for sequential data. Connections form cycles, so the hidden state computed at one time step is fed back as part of the input for the next step. They are effective for tasks like time series prediction and natural language processing.

- Convolutional Neural Networks (CNN): Specialized for grid-structured data such as images, using convolutional layers that slide small learned filters over the input to capture spatial hierarchies of features.
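To make the difference between the recurrent and convolutional ideas concrete, here is a deliberately tiny sketch of each. The scalar hidden state, the function names `rnn_scan` and `conv1d`, and all parameter values are assumptions for illustration; real layers operate on vectors and tensors with learned parameters.

```python
import math

def rnn_scan(xs, w_x, w_h, b, h0=0.0):
    """Run a one-unit recurrent cell over a sequence.

    Each new state depends on the current input and the previous state.
    """
    h = h0
    states = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

def conv1d(signal, kernel):
    """Slide the kernel over the signal without padding.

    As in most deep learning libraries, the kernel is not flipped,
    so this is technically cross-correlation.
    """
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

# The second state is non-zero even though the second input is zero,
# because the first state is carried forward.
print(rnn_scan([1.0, 0.0], w_x=1.0, w_h=1.0, b=0.0))

# A difference filter applied at every position of the signal.
print(conv1d([1, 2, 4, 7, 11], [-1, 1]))  # → [1, 2, 3, 4]
```

The recurrent cell reuses the same weights at every time step, and the convolution reuses the same kernel at every position; this weight sharing is what distinguishes both from a plain fully connected layer.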

Applications of ANNs

ANNs are used in a wide range of applications across various domains:

- Image Recognition: Identifying and classifying objects within images.

- Speech Recognition: Converting spoken language into text.

- Natural Language Processing (NLP): Tasks such as language translation, sentiment analysis, and text generation.

- Recommendation Systems: Predicting user preferences and suggesting relevant items.

- Healthcare: Predictive diagnostics, medical image analysis, and personalized treatment plans.

- Finance: Fraud detection, stock market prediction, and risk assessment.

Challenges and Considerations

- Overfitting: Occurs when a model performs well on training data but poorly on unseen data. Techniques such as dropout, weight regularization (for example, an L2 penalty), and data augmentation can help mitigate this issue.

- Computational Resources: Training deep ANNs requires significant computational power and memory, often necessitating the use of GPUs.

- Data Requirements: For supervised tasks, ANNs typically require large amounts of labeled data to achieve high performance.

- Hyperparameter Tuning: Choosing the right architecture, number of layers, learning rate, and other hyperparameters is crucial and often requires extensive experimentation.
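Two of the overfitting remedies mentioned above, dropout and L2 regularization, can be sketched in a few lines. This is an illustrative version only: it uses the common "inverted dropout" formulation, and the drop rate and the penalty coefficient `lam` are hypothetical hyperparameters that would themselves need tuning.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate`
    and rescale the survivors so the expected value is unchanged.
    At inference time (training=False) the input is returned as-is.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, lam):
    """Term added to the loss that grows with the squared size of the weights."""
    return lam * sum(w * w for w in weights)

rng = random.Random(0)
hidden = [1.0, 2.0, 3.0, 4.0]
print(dropout(hidden, rate=0.5, rng=rng))                  # some entries zeroed
print(dropout(hidden, rate=0.5, rng=rng, training=False))  # unchanged
print(l2_penalty([1.0, -2.0], lam=0.1))
```

Both techniques discourage the network from relying too heavily on any single neuron or weight, which tends to improve performance on unseen data.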

Future Trends

- Explainable AI: Efforts to make ANNs more interpretable and understandable to humans.

- Federated Learning: Training models across decentralized devices while keeping data localized.

- Edge AI: Running ANN models on edge devices like smartphones and IoT devices for real-time processing.

Artificial neural networks form the backbone of many modern AI applications because they can learn from data and generalize to unseen inputs. Their versatility continues to drive advances in many fields, making them a core tool in deep learning.
