K-Nearest Neighbors Algorithm

KNN (K-Nearest Neighbors) is a simple yet effective supervised machine learning algorithm used for both classification and regression tasks.

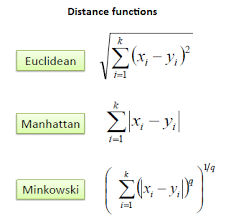

In KNN, the algorithm finds the K nearest data points to a given input data point in the training dataset based on a distance metric (e.g., Euclidean distance).

The class or value of the input data point is then predicted by taking the majority class or mean value of the K nearest neighbors.

For example, in a binary classification task, if K=5 and the 5 nearest neighbors to the input data point are 3 positive and 2 negative, the algorithm would predict the input as positive.

KNN has some advantages, such as being easy to implement and interpret, but it also has some limitations, including its sensitivity to irrelevant and redundant features, and the need to determine the optimal value of K.

Additionally, the algorithm can be computationally expensive if the training dataset is large, or the feature space is high-dimensional. Despite its limitations, KNN is still a widely used and popular algorithm, particularly for datasets with small or medium-sized feature spaces and a moderate number of data points.

Enroll Now

- Python Programming

- Machine Learning