Ensemble Learning

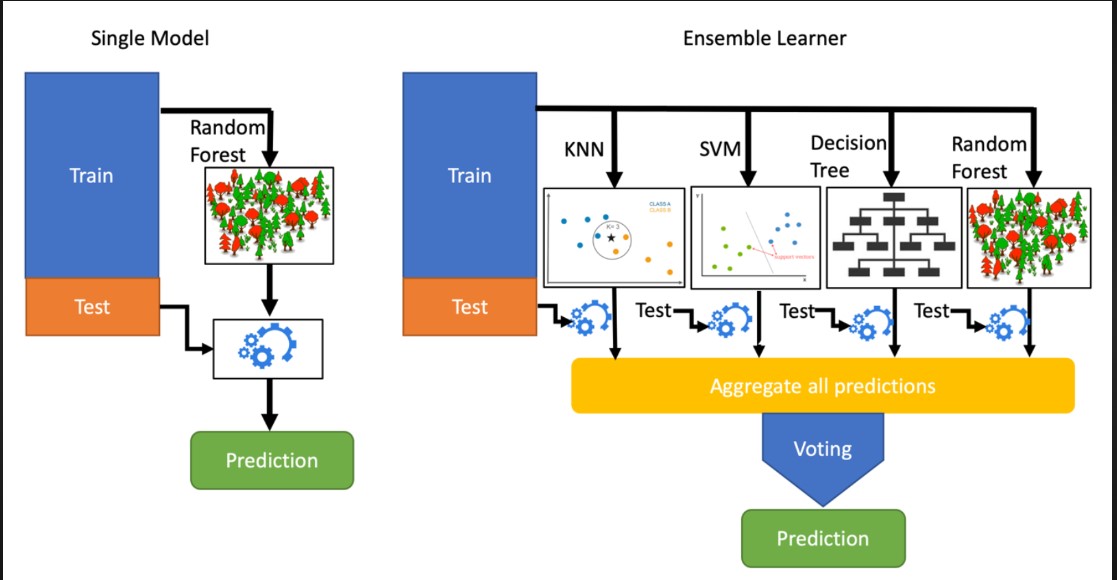

Ensemble learning is a machine learning technique that combines the predictions or decisions of multiple models (often called "base models" or "learners") to improve overall performance.

The idea behind ensemble learning is that by aggregating the output of multiple models, you can often achieve better results than with a single model.

Ensemble methods are widely used in machine learning because they can increase accuracy, reduce overfitting, and enhance robustness.

Key concepts and popular ensemble methods include:

Voting Ensembles:

- In voting ensembles, multiple base models are trained independently, and their predictions are combined to make a final decision. There are two main types:

- Hard Voting: Each base model's prediction is treated as a "vote," and the most frequent prediction is selected as the final output.

- Soft Voting: Instead of discrete votes, the models provide probability scores for each class. The scores are averaged across models, and the class with the highest average probability is selected as the final output.
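The difference between the two schemes can be sketched in a few lines of plain Python. The three probability dictionaries below are made-up outputs for a single sample, and `hard_vote` and `soft_vote` are illustrative helper names, not any library's API.

```python
from collections import Counter

def hard_vote(labels):
    """Return the most frequent label among the base models' predictions."""
    return Counter(labels).most_common(1)[0][0]

def soft_vote(probabilities):
    """Average per-class probabilities across models and return the top class."""
    classes = probabilities[0].keys()
    n_models = len(probabilities)
    averaged = {c: sum(p[c] for p in probabilities) / n_models for c in classes}
    return max(averaged, key=averaged.get), averaged

# Hypothetical class probabilities from three base models for one sample.
model_probs = [
    {"cat": 0.90, "dog": 0.10},
    {"cat": 0.40, "dog": 0.60},
    {"cat": 0.45, "dog": 0.55},
]

# Each model's discrete vote is its most probable class.
model_labels = [max(p, key=p.get) for p in model_probs]

hard_label = hard_vote(model_labels)
soft_label, averaged = soft_vote(model_probs)
print("hard:", hard_label, "| soft:", soft_label, averaged)
```

In this example hard voting picks "dog" (two votes to one), while soft voting picks "cat" because the first model is far more confident than the other two. Soft voting only makes sense when the base models output reasonably calibrated probabilities.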

Bagging (Bootstrap Aggregating):

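- Bagging involves training multiple instances of the same base model on different random subsets of the training data, typically bootstrap samples drawn with replacement. The final prediction is the average (for regression) or the majority vote (for classification) of the predictions made by each base model.

- A popular example of bagging is the Random Forest algorithm, which uses decision trees as base models and additionally considers only a random subset of features at each split.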
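As a minimal sketch of the idea, the snippet below bags a very simple base model: a one-feature threshold classifier ("decision stump"). The toy data, the helper names, and the choice of 25 models are assumptions made for illustration. A real Random Forest uses full decision trees and random feature subsets as well.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a 1-D threshold classifier that predicts 1 if x > threshold, else 0."""
    best_threshold, best_errors = None, len(xs) + 1
    for t in sorted(set(xs)):
        errors = sum((x > t) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train one stump per bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    thresholds = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample of size n
        thresholds.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return thresholds

def bagging_predict(thresholds, x):
    """Majority vote over the individual stumps."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]

# Toy data with one noisy label at x = 3.0.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
ys = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

ensemble = bagging_fit(xs, ys)
print(bagging_predict(ensemble, 1.0), bagging_predict(ensemble, 4.8))
```

Because each stump sees a slightly different resample, the learned thresholds vary, and the vote smooths out the effect of the noisy point.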

Boosting:

- Boosting aims to improve the accuracy of weak learners (models that perform only slightly better than random guessing) by combining them into a strong learner. The models are trained sequentially, each one concentrating on the examples its predecessors handled poorly. AdaBoost, for example, does this by increasing the weights of training instances that were misclassified by previous models.

- Popular boosting algorithms include AdaBoost, Gradient Boosting, and XGBoost.
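The reweighting step can be illustrated with one round of the standard AdaBoost update for labels in {-1, +1}. This is a sketch of the update rule only, not a full training loop. The labels and predictions are made up, and the function assumes the weighted error is strictly between 0 and 1.

```python
import math

def adaboost_round(weights, y_true, y_pred):
    """One AdaBoost round: return the learner's weight (alpha) and new sample weights."""
    # Weighted error of the current weak learner.
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    # Learners with lower error get a larger say in the final vote.
    alpha = 0.5 * math.log((1 - err) / err)
    # Misclassified samples (t * p == -1) are scaled up, correct ones scaled down.
    updated = [w * math.exp(-alpha * t * p)
               for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(updated)
    return alpha, [w / total for w in updated]

y_true = [+1, +1, -1, -1, +1]
y_pred = [+1, -1, -1, -1, +1]   # the weak learner gets sample 1 wrong
weights = [0.2] * 5             # start from uniform weights

alpha, new_weights = adaboost_round(weights, y_true, y_pred)
print(round(alpha, 3), [round(w, 3) for w in new_weights])
```

After this round the single misclassified sample carries half of the total weight, so the next weak learner is pushed to get it right.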

Stacking (Stacked Generalization):

- Stacking combines predictions from multiple base models using a meta-learner (or a "level-2" model) that learns to make predictions based on the outputs of the base models.

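- Stacking allows you to leverage the strengths of different types of models. It works best when the meta-learner is trained on out-of-fold predictions, that is, predictions each base model makes on data it did not see during training. Otherwise the meta-learner mostly learns how well the base models memorized the training set.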
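A minimal stacking sketch for 1-D regression follows. The two base models (a least-squares line and a 1-nearest-neighbour regressor), the single-weight meta-learner, and all function names are assumptions chosen to keep the example short. In practice the meta-learner is usually a regularized linear or logistic model.

```python
def fit_linear(xs, ys):
    """Least-squares line; returns a predict function."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)

def fit_nearest(xs, ys):
    """1-nearest-neighbour regressor; returns a predict function."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def out_of_fold(fit, xs, ys, k=5):
    """Predict every point with a model that did not see it during training."""
    preds = [0.0] * len(xs)
    for fold in range(k):
        held = set(range(fold, len(xs), k))
        train = [i for i in range(len(xs)) if i not in held]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in held:
            preds[i] = model(xs[i])
    return preds

def fit_stack(xs, ys, k=5):
    a = out_of_fold(fit_linear, xs, ys, k)
    b = out_of_fold(fit_nearest, xs, ys, k)
    # Meta-learner: the weight w that minimises sum((w*a + (1-w)*b - y)^2).
    num = sum((ai - bi) * (yi - bi) for ai, bi, yi in zip(a, b, ys))
    den = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    w = num / den if den else 0.5
    # Refit the base models on all data for use at prediction time.
    linear, nearest = fit_linear(xs, ys), fit_nearest(xs, ys)
    return w, lambda x: w * linear(x) + (1 - w) * nearest(x)

xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]   # exactly linear toy data
w, predict = fit_stack(xs, ys)
print(round(w, 3), round(predict(10.5), 3))
```

On this exactly linear toy data the meta-learner puts essentially all of its weight on the linear base model, which is the behaviour one would hope for.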

Blending:

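- Blending is similar to stacking but divides the training dataset into two parts: one for training the base models and a hold-out part for training the meta-learner. It is simpler and faster than stacking with cross-validation, but because the hold-out part is never used to train the base models, it is less data-efficient and tends to suit larger datasets better than small ones.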
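The hold-out split can be sketched as follows. The base models (a least-squares line and a constant mean predictor), the 30% hold-out fraction, and the single-weight meta-learner are illustrative assumptions.

```python
import random

def fit_linear(xs, ys):
    """Least-squares line; returns a predict function."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)

def fit_mean(xs, ys):
    """Constant predictor that always returns the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def fit_blend(xs, ys, holdout_fraction=0.3, seed=0):
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_hold = max(2, int(len(xs) * holdout_fraction))
    hold, train = idx[:n_hold], idx[n_hold:]
    # Base models see only the training part.
    linear = fit_linear([xs[i] for i in train], [ys[i] for i in train])
    mean = fit_mean([xs[i] for i in train], [ys[i] for i in train])
    # The meta-learner (a single blend weight) is fit on the hold-out part only.
    a = [linear(xs[i]) for i in hold]
    b = [mean(xs[i]) for i in hold]
    y = [ys[i] for i in hold]
    num = sum((ai - bi) * (yi - bi) for ai, bi, yi in zip(a, b, y))
    den = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    w = num / den if den else 0.5
    return w, lambda x: w * linear(x) + (1 - w) * mean(x)

xs = [float(i) for i in range(20)]
ys = [3.0 * x - 2.0 for x in xs]   # exactly linear toy data
w, predict = fit_blend(xs, ys)
print(round(w, 3), round(predict(25.0), 3))
```

Unlike the cross-validated approach used in stacking, each base model is trained once, at the cost of withholding part of the data from it.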

Bootstrapped Ensembles:

- These ensembles involve training multiple models on bootstrapped samples of the training data. Bagging and Random Forest are examples of bootstrapped ensembles.

Gradient Boosting Machines (GBMs):

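- GBMs are a class of ensemble methods that build decision trees sequentially, with each new tree fit to the errors (more precisely, the negative gradient of the loss) left by the trees before it. Its prediction is then added to the ensemble, usually scaled by a small learning rate. XGBoost is a popular implementation.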
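For squared-error loss, the negative gradient is simply the residual, so the sequential procedure can be sketched with one-split regression stumps in plain Python. The toy data, the 50 rounds, and the learning rate of 0.1 are assumptions for illustration. Production libraries add deeper trees, regularization, and subsampling.

```python
def fit_stump(xs, residuals):
    """Best single-split regression stump: (threshold, left_mean, right_mean)."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((r - lm) ** 2 for r in left)
               + sum((r - rm) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    return best[1:]

def gradient_boost(xs, ys, n_trees=50, learning_rate=0.1):
    base = sum(ys) / len(ys)          # initial prediction: the mean
    fitted = [base] * len(xs)
    stumps = []
    for _ in range(n_trees):
        # Residuals are the negative gradient of the squared-error loss.
        residuals = [y - p for y, p in zip(ys, fitted)]
        t, lm, rm = fit_stump(xs, residuals)
        stumps.append((t, lm, rm))
        fitted = [p + learning_rate * (lm if x <= t else rm)
                  for x, p in zip(xs, fitted)]

    def predict(x):
        return base + learning_rate * sum(lm if x <= t else rm
                                          for t, lm, rm in stumps)
    return predict, fitted

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

xs = [float(i) for i in range(10)]
ys = [x ** 2 for x in xs]
predict, fitted = gradient_boost(xs, ys)
print(mse(ys, [sum(ys) / len(ys)] * len(ys)), mse(ys, fitted))
```

Each round shrinks the training error a little. The small learning rate trades more rounds for a smoother fit.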

Ensemble learning is a versatile technique that can be applied to various machine learning tasks, including classification, regression, and even anomaly detection.

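When using ensemble methods, it's essential to choose diverse base models or learners, meaning models whose errors are not strongly correlated. Models that all make the same mistakes add little when combined, whereas models that err on different inputs can correct one another.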
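A quick calculation shows why this matters. Under the idealized assumption that the base models' errors are fully independent and every model has the same accuracy `p`, the accuracy of a majority vote follows a binomial distribution. The function name is illustrative, and real models are never perfectly independent, so treat the numbers as an upper bound on the benefit.

```python
from math import comb

def majority_vote_accuracy(n_models, p):
    """Probability that a strict majority of n independent models is correct."""
    k_min = n_models // 2 + 1
    return sum(comb(n_models, k) * p ** k * (1 - p) ** (n_models - k)
               for k in range(k_min, n_models + 1))

for n in (1, 3, 11):
    print(n, round(majority_vote_accuracy(n, 0.7), 3))
```

With three independent models that are each 70% accurate, the majority vote is correct about 78.4% of the time, and the figure keeps rising as more independent models are added. If the models were perfectly correlated, the ensemble would stay at 70%.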
